WARNING: Logging before InitGoogle() is written to STDERR

I0000 00:00:1673716544.649557 269 common_lib.cc:145] Failed to fetch URL on try 1 out of 6: Timeout was reached

I0000 00:00:1673716555.150005 269 common_lib.cc:145] Failed to fetch URL on try 2 out of 6: Timeout was reached

I0000 00:00:1673716565.651888 269 common_lib.cc:145] Failed to fetch URL on try 3 out of 6: Timeout was reached

I0000 00:00:1673716576.152164 269 common_lib.cc:145] Failed to fetch URL on try 4 out of 6: Timeout was reached

I0000 00:00:1673716586.653446 269 common_lib.cc:145] Failed to fetch URL on try 5 out of 6: Timeout was reached

I0000 00:00:1673716597.153993 269 common_lib.cc:145] Failed to fetch URL on try 6 out of 6: Timeout was reached

Failed to get agent-worker-number with error: Timeout was reached

I0000 00:00:1673716607.655526 269 common_lib.cc:145] Failed to fetch URL on try 1 out of 6: Timeout was reached

I0000 00:00:1673716618.155990 269 common_lib.cc:145] Failed to fetch URL on try 2 out of 6: Timeout was reached

I0000 00:00:1673716628.657651 269 common_lib.cc:145] Failed to fetch URL on try 3 out of 6: Timeout was reached

I0000 00:00:1673716639.157863 269 common_lib.cc:145] Failed to fetch URL on try 4 out of 6: Timeout was reached

I0000 00:00:1673716649.659285 269 common_lib.cc:145] Failed to fetch URL on try 5 out of 6: Timeout was reached

I0000 00:00:1673716660.159948 269 common_lib.cc:145] Failed to fetch URL on try 6 out of 6: Timeout was reached

I0000 00:00:1673716670.661610 269 common_lib.cc:145] Failed to fetch URL on try 1 out of 6: Timeout was reached

I0000 00:00:1673716681.162626 269 common_lib.cc:145] Failed to fetch URL on try 2 out of 6: Timeout was reached

I0000 00:00:1673716691.664552 269 common_lib.cc:145] Failed to fetch URL on try 3 out of 6: Timeout was reached

I0000 00:00:1673716702.164761 269 common_lib.cc:145] Failed to fetch URL on try 4 out of 6: Timeout was reached

I0000 00:00:1673716712.666079 269 common_lib.cc:145] Failed to fetch URL on try 5 out of 6: Timeout was reached

I0000 00:00:1673716723.166580 269 common_lib.cc:145] Failed to fetch URL on try 6 out of 6: Timeout was reached

I0000 00:00:1673716733.667981 269 common_lib.cc:145] Failed to fetch URL on try 1 out of 6: Timeout was reached

I0000 00:00:1673716744.168007 269 common_lib.cc:145] Failed to fetch URL on try 2 out of 6: Timeout was reached

I0000 00:00:1673716754.668983 269 common_lib.cc:145] Failed to fetch URL on try 3 out of 6: Timeout was reached

I0000 00:00:1673716765.169728 269 common_lib.cc:145] Failed to fetch URL on try 4 out of 6: Timeout was reached

I0000 00:00:1673716775.671754 269 common_lib.cc:145] Failed to fetch URL on try 5 out of 6: Timeout was reached

I0000 00:00:1673716786.172612 269 common_lib.cc:145] Failed to fetch URL on try 6 out of 6: Timeout was reached

I0000 00:00:1673716796.673994 269 common_lib.cc:145] Failed to fetch URL on try 1 out of 6: Timeout was reached

I0000 00:00:1673716807.174525 269 common_lib.cc:145] Failed to fetch URL on try 2 out of 6: Timeout was reached

I0000 00:00:1673716817.676271 269 common_lib.cc:145] Failed to fetch URL on try 3 out of 6: Timeout was reached

I0000 00:00:1673716828.177038 269 common_lib.cc:145] Failed to fetch URL on try 4 out of 6: Timeout was reached

Exception in device=TPU:1: tensorflow/compiler/xla/xla_client/mesh_service.cc:329 : Check failed: impl_->channel->WaitForConnected( std::chrono::system_clock::now() + std::chrono::seconds(connect_wait_seconds))

*** Begin stack trace ***

tsl::CurrentStackTrace()

xla::service::MeshClient::MeshClient(std::string const&)

xla::service::MeshClient::Get()

xla::ComputationClient::Create()

xla::ComputationClient::Get()

PyCFunction_Call

_PyObject_MakeTpCall

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

_PyObject_GenericGetAttrWithDict

_PyEval_EvalFrameDefault

_PyEval_EvalCodeWithName

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

PyVectorcall_Call

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

PyVectorcall_Call

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyEval_EvalCodeWithName

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyObject_FastCallDict

_PyObject_Call_Prepend

_PyObject_MakeTpCall

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyEval_EvalCodeWithName

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyEval_EvalCodeWithName

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyEval_EvalCodeWithName

PyEval_EvalCodeEx

PyEval_EvalCode

_PyEval_EvalFrameDefault

_PyEval_EvalFrameDefault

_PyEval_EvalFrameDefault

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyEval_EvalCodeWithName

_PyFunction_Vectorcall

PyVectorcall_Call

_PyEval_EvalFrameDefault

_PyEval_EvalCodeWithName

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyEval_EvalFrameDefault

_PyEval_EvalFrameDefault

_PyEval_EvalFrameDefault

_PyEval_EvalFrameDefault

_PyObject_MakeTpCall

PyVectorcall_Call

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyEval_EvalCodeWithName

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyEval_EvalCodeWithName

PyEval_EvalCodeEx

PyEval_EvalCode

_PyEval_EvalFrameDefault

_PyEval_EvalCodeWithName

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyEval_EvalCodeWithName

_PyFunction_Vectorcall

PyVectorcall_Call

Py_RunMain

Py_BytesMain

*** End stack trace ***

Failed to connect to client mesh master: 785db6c3ea80:57619

Traceback (most recent call last):

File "/usr/local/lib/python3.8/site-packages/torch_xla/distributed/xla_multiprocessing.py", line 331, in _mp_start_fn

_start_fn(index, pf_cfg, fn, args)

Exception in device=TPU:2: tensorflow/compiler/xla/xla_client/mesh_service.cc:329 : Check failed: impl_->channel->WaitForConnected( std::chrono::system_clock::now() + std::chrono::seconds(connect_wait_seconds))

*** Begin stack trace ***

tsl::CurrentStackTrace()

xla::service::MeshClient::MeshClient(std::string const&)

xla::service::MeshClient::Get()

xla::ComputationClient::Create()

xla::ComputationClient::Get()

PyCFunction_Call

_PyObject_MakeTpCall

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

_PyObject_GenericGetAttrWithDict

_PyEval_EvalFrameDefault

_PyEval_EvalCodeWithName

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

PyVectorcall_Call

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

PyVectorcall_Call

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyEval_EvalCodeWithName

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyObject_FastCallDict

_PyObject_Call_Prepend

_PyObject_MakeTpCall

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyEval_EvalCodeWithName

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyEval_EvalCodeWithName

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyEval_EvalCodeWithName

PyEval_EvalCodeEx

PyEval_EvalCode

_PyEval_EvalFrameDefault

_PyEval_EvalFrameDefault

_PyEval_EvalFrameDefault

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyEval_EvalCodeWithName

_PyFunction_Vectorcall

PyVectorcall_Call

_PyEval_EvalFrameDefault

_PyEval_EvalCodeWithName

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyEval_EvalFrameDefault

_PyEval_EvalFrameDefault

_PyEval_EvalFrameDefault

_PyEval_EvalFrameDefault

_PyObject_MakeTpCall

PyVectorcall_Call

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyEval_EvalCodeWithName

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyEval_EvalCodeWithName

PyEval_EvalCodeEx

PyEval_EvalCode

_PyEval_EvalFrameDefault

_PyEval_EvalCodeWithName

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyEval_EvalCodeWithName

_PyFunction_Vectorcall

PyVectorcall_Call

Py_RunMain

Py_BytesMain

*** End stack trace ***

Failed to connect to client mesh master: 785db6c3ea80:57619

File "/usr/local/lib/python3.8/site-packages/torch_xla/distributed/xla_multiprocessing.py", line 324, in _start_fn

_setup_replication()

File "/usr/local/lib/python3.8/site-packages/torch_xla/distributed/xla_multiprocessing.py", line 316, in _setup_replication

device = xm.xla_device()

File "/usr/local/lib/python3.8/site-packages/torch_xla/core/xla_model.py", line 245, in xla_device

devices = get_xla_supported_devices(devkind=devkind)

Traceback (most recent call last):

File "/usr/local/lib/python3.8/site-packages/torch_xla/core/xla_model.py", line 139, in get_xla_supported_devices

xla_devices = _DEVICES.value

File "/usr/local/lib/python3.8/site-packages/torch_xla/distributed/xla_multiprocessing.py", line 331, in _mp_start_fn

_start_fn(index, pf_cfg, fn, args)

File "/usr/local/lib/python3.8/site-packages/torch_xla/utils/utils.py", line 32, in value

self._value = self._gen_fn()

File "/usr/local/lib/python3.8/site-packages/torch_xla/distributed/xla_multiprocessing.py", line 324, in _start_fn

_setup_replication()

File "/usr/local/lib/python3.8/site-packages/torch_xla/core/xla_model.py", line 21, in <lambda>

_DEVICES = xu.LazyProperty(lambda: torch_xla._XLAC._xla_get_devices())

File "/usr/local/lib/python3.8/site-packages/torch_xla/distributed/xla_multiprocessing.py", line 316, in _setup_replication

device = xm.xla_device()

RuntimeError: tensorflow/compiler/xla/xla_client/mesh_service.cc:329 : Check failed: impl_->channel->WaitForConnected( std::chrono::system_clock::now() + std::chrono::seconds(connect_wait_seconds))

*** Begin stack trace ***

tsl::CurrentStackTrace()

xla::service::MeshClient::MeshClient(std::string const&)

xla::service::MeshClient::Get()

xla::ComputationClient::Create()

xla::ComputationClient::Get()

PyCFunction_Call

_PyObject_MakeTpCall

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

_PyObject_GenericGetAttrWithDict

_PyEval_EvalFrameDefault

_PyEval_EvalCodeWithName

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

PyVectorcall_Call

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

PyVectorcall_Call

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyEval_EvalCodeWithName

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyObject_FastCallDict

_PyObject_Call_Prepend

_PyObject_MakeTpCall

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyEval_EvalCodeWithName

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyEval_EvalCodeWithName

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyEval_EvalCodeWithName

PyEval_EvalCodeEx

PyEval_EvalCode

_PyEval_EvalFrameDefault

_PyEval_EvalFrameDefault

_PyEval_EvalFrameDefault

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyEval_EvalCodeWithName

_PyFunction_Vectorcall

PyVectorcall_Call

_PyEval_EvalFrameDefault

_PyEval_EvalCodeWithName

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyEval_EvalFrameDefault

_PyEval_EvalFrameDefault

_PyEval_EvalFrameDefault

_PyEval_EvalFrameDefault

_PyObject_MakeTpCall

PyVectorcall_Call

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyEval_EvalCodeWithName

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyEval_EvalCodeWithName

PyEval_EvalCodeEx

PyEval_EvalCode

_PyEval_EvalFrameDefault

_PyEval_EvalCodeWithName

_PyFunction_Vectorcall

_PyEval_EvalFrameDefault

_PyEval_EvalCodeWithName

_PyFunction_Vectorcall

PyVectorcall_Call

Py_RunMain

Py_BytesMain

*** End stack trace ***

Failed to connect to client mesh master: 785db6c3ea80:57619

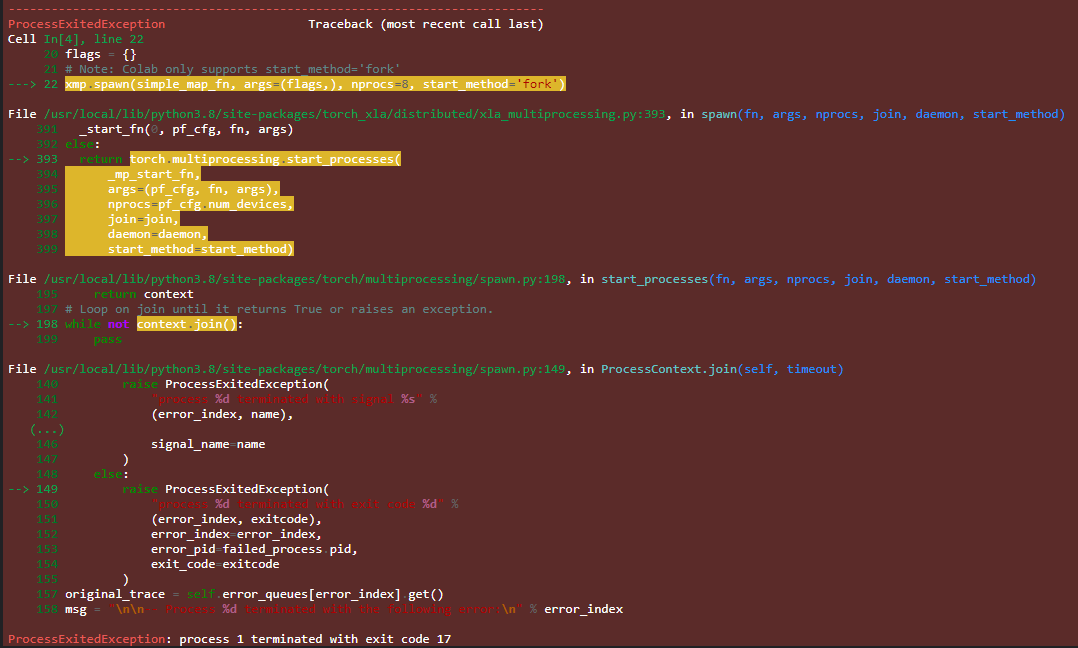

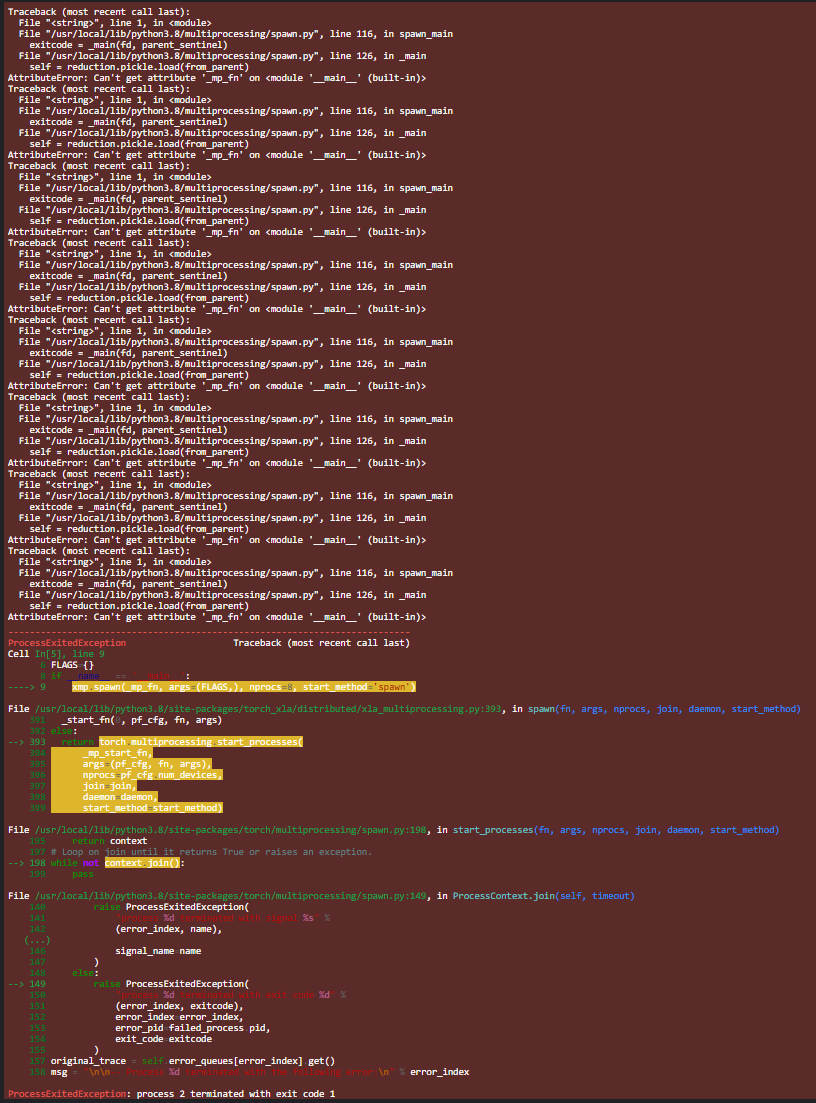

ProcessExitedException Traceback (most recent call last)

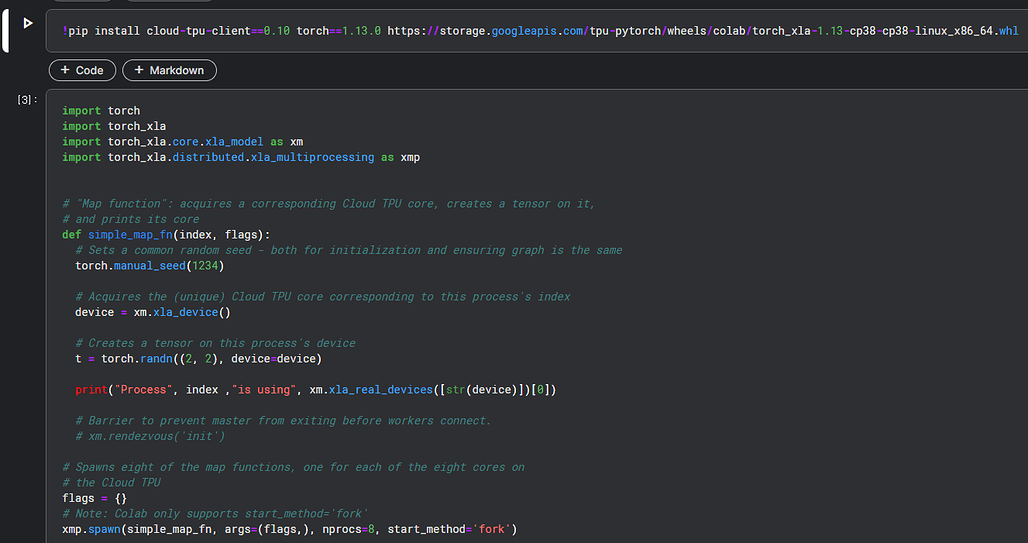

Cell In[4], line 22

20 flags = {}

21 # Note: Colab only supports start_method='fork'

---> 22 xmp.spawn(simple_map_fn, args=(flags,), nprocs=8, start_method='fork')

File /usr/local/lib/python3.8/site-packages/torch_xla/distributed/xla_multiprocessing.py:393, in spawn(fn, args, nprocs, join, daemon, start_method)

391 _start_fn(0, pf_cfg, fn, args)

392 else:

--> 393 return torch.multiprocessing.start_processes(

394 _mp_start_fn,

395 args=(pf_cfg, fn, args),

396 nprocs=pf_cfg.num_devices,

397 join=join,

398 daemon=daemon,

399 start_method=start_method)

File /usr/local/lib/python3.8/site-packages/torch/multiprocessing/spawn.py:198, in start_processes(fn, args, nprocs, join, daemon, start_method)

195 return context

197 # Loop on join until it returns True or raises an exception.

--> 198 while not context.join():

199 pass

File /usr/local/lib/python3.8/site-packages/torch/multiprocessing/spawn.py:149, in ProcessContext.join(self, timeout)

140 raise ProcessExitedException(

141 "process %d terminated with signal %s" %

142 (error_index, name),

(...)

146 signal_name=name

147 )

148 else:

--> 149 raise ProcessExitedException(

150 "process %d terminated with exit code %d" %

151 (error_index, exitcode),

152 error_index=error_index,

153 error_pid=failed_process.pid,

154 exit_code=exitcode

155 )

157 original_trace = self.error_queues[error_index].get()

158 msg = "\n\n-- Process %d terminated with the following error:\n" % error_index

ProcessExitedException: process 1 terminated with exit code 17