With permission from xguru of GeekNews, I am sharing AI-related news from the posts on GN.

Introduction

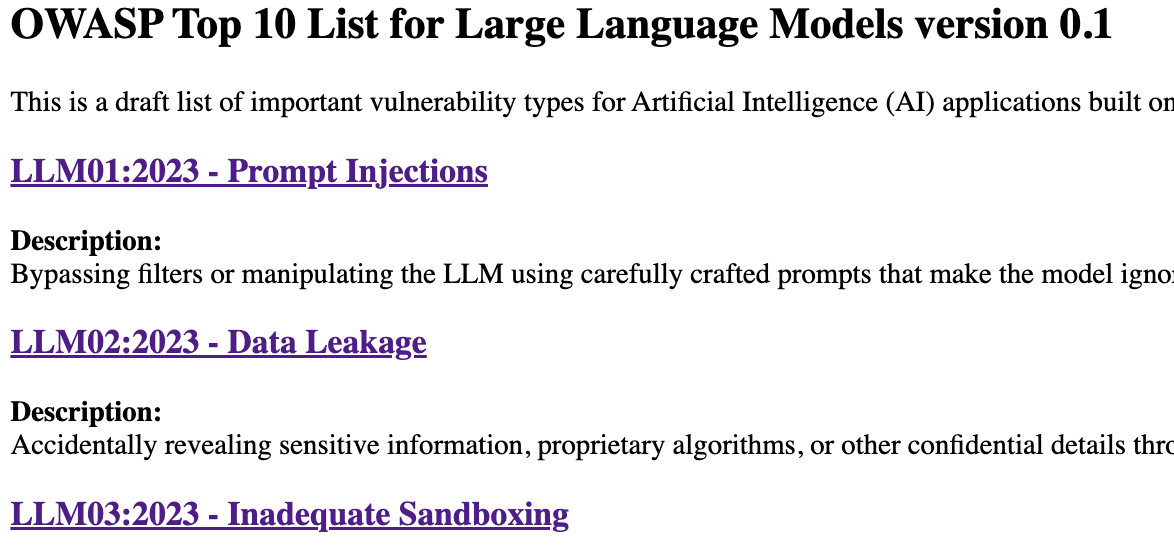

Describes LLM vulnerabilities and how to prevent them, scenario by scenario:

- LLM01:2023 - Prompt Injections

- LLM02:2023 - Data Leakage

- LLM03:2023 - Inadequate Sandboxing

- LLM04:2023 - Unauthorized Code Execution

- LLM05:2023 - SSRF Vulnerabilities

- LLM06:2023 - Overreliance on LLM-generated Content

- LLM07:2023 - Inadequate AI Alignment

- LLM08:2023 - Insufficient Access Controls

- LLM09:2023 - Improper Error Handling

- LLM10:2023 - Training Data Poisoning

Original article

https://owasp.org/www-project-top-10-for-large-language-model-applications/descriptions/